Vivswan Shitole

shitolev@oregonstate.edu

I am currently a Graduate Research Assistant working on Explainable Artificial Intelligence under Dr. Prasad Tadepalli at Oregon State University. I have hands-on research and industry experience in several areas of AI and Machine Learning. My work spans:

- Computer Vision for Object Tracking and Surveillance using Deep Learning

- Discrete Event Simulation Optimization using Deep Reinforcement Learning

- Explaining Convolutional Neural Networks via Saliency Maps

- Unsupervised Density Estimation using Deep Autoregressive Models

- Video Summarization using Submodular Functions

- Domain Adaptation in Reinforcement Learning

Projects

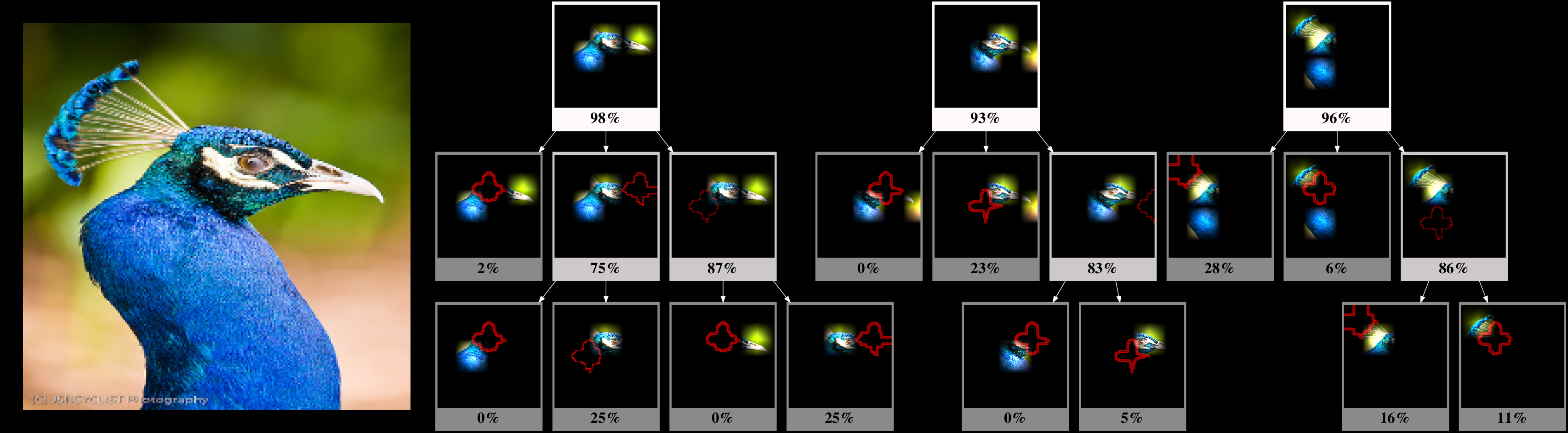

Structured Attention Graphs for Understanding Deep Image Classifications


Humans can look at different occluded versions of an object and still identify it. This project shows that convolutional neural networks can likewise identify an image's class with high confidence from minimal regions of the image. The key finding is that there exist multiple distinct sets of such minimal regions for a given image. We represent these regions as directed acyclic graphs, termed Structured Attention Graphs (SAGs), which provide insight into how the network arrives at its classification.

Simulation Optimization using Reinforcement Learning


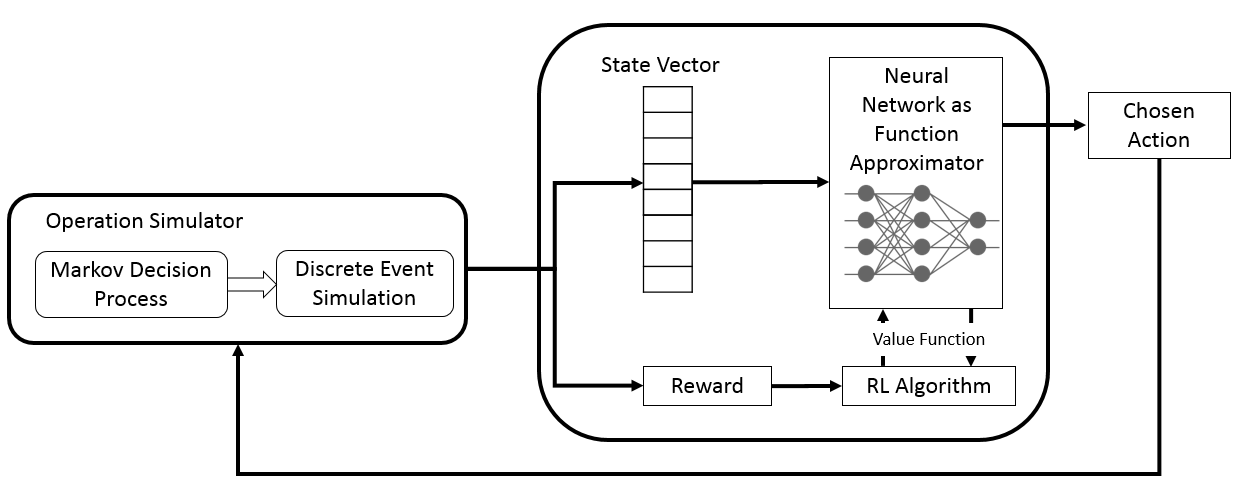

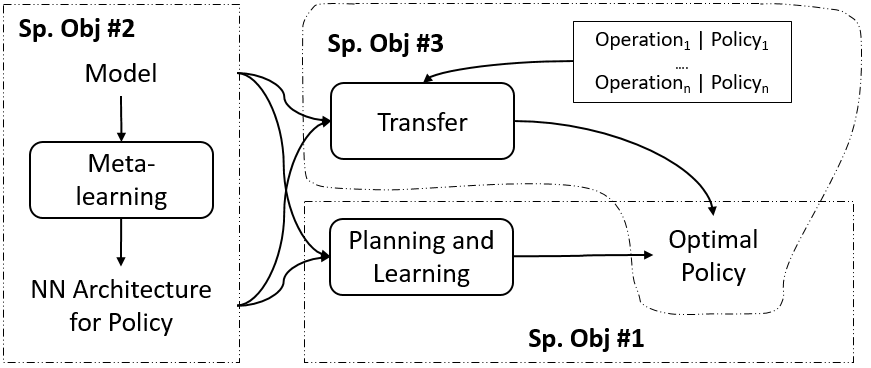

A project on optimizing an industrial process by converting it into an approximate MDP and applying model-free reinforcement learning algorithms on a discrete event simulator. Implemented in TensorFlow. Published at the Winter Simulation Conference 2019.

Exploring Priors of Trained RL Agent to Gauge Policy Robustness


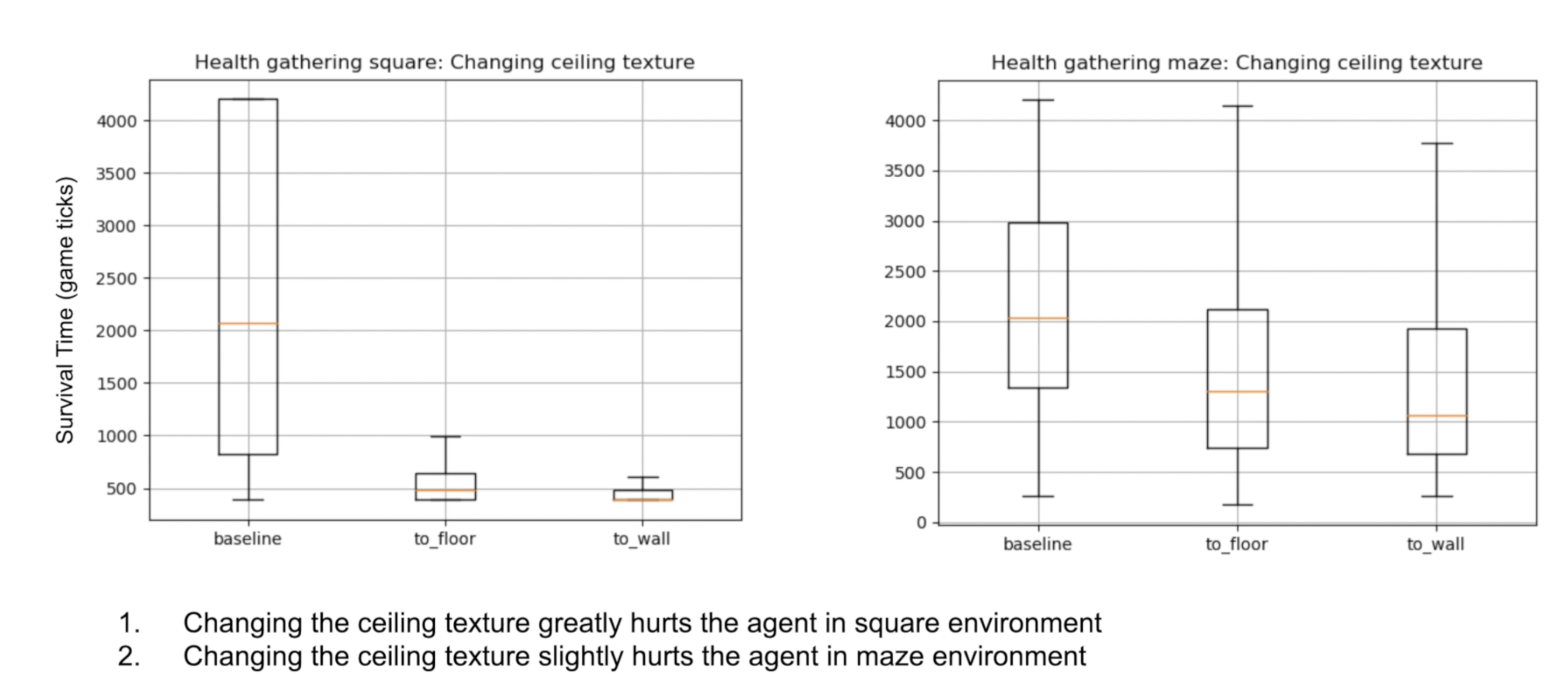

This project studies the robustness and adaptability of the pretrained policy of an embodied RL agent (Arnold) on modified versions of its training environment (VizDoom). These modifications alter the agent's state and action spaces and include changes to the agent's speed and height, variations in environment lighting, ablation of objects in the environment, and swapping of environment textures. Implemented in PyTorch.

Object Tracking and Surveillance using Computer Vision


This project involves building computer vision applications for object tracking, object counting, object tampering detection, intrusion detection and surveillance by performing transfer learning on object localization networks such as Darknet YOLO, Faster R-CNN and MobileNet-SSD. A Kalman-filter-based SOR tracker was used for tracking. Developed using C++ and Caffe.
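To illustrate the Kalman filter at the heart of such a tracker, here is a minimal sketch that follows a single coordinate under a constant-velocity model. It is a simplified, hypothetical example (pure Python, one dimension, a diagonal process-noise term `q`, and made-up noise values), not the C++ tracker used in the project; a full multi-object tracker would typically run one filter per bounding-box coordinate and also associate detections with tracks.

```python
from dataclasses import dataclass


@dataclass
class ConstantVelocityKalman1D:
    """Hypothetical 1-D Kalman filter: state (position p, velocity v), position-only measurements."""
    dt: float = 1.0   # time between frames (assumed constant)
    q: float = 1e-2   # process-noise variance added to each state entry (simplified diagonal Q)
    r: float = 1.0    # measurement-noise variance
    p: float = 0.0    # position estimate
    v: float = 0.0    # velocity estimate
    # Symmetric 2x2 covariance [[a, b], [b, d]]; large initial values mean "very uncertain".
    a: float = 10.0
    b: float = 0.0
    d: float = 10.0

    def predict(self) -> float:
        # State prediction x <- F x with F = [[1, dt], [0, 1]].
        self.p += self.dt * self.v
        # Covariance prediction P <- F P F^T + Q, expanded by hand for the 2x2 case.
        self.a += 2 * self.dt * self.b + self.dt ** 2 * self.d + self.q
        self.b += self.dt * self.d
        self.d += self.q
        return self.p

    def update(self, z: float) -> None:
        y = z - self.p                    # innovation; H = [1, 0] observes position only
        s = self.a + self.r               # innovation variance H P H^T + R
        k0, k1 = self.a / s, self.b / s   # Kalman gain K = P H^T / s
        self.p += k0 * y
        self.v += k1 * y
        # Covariance update P <- (I - K H) P, expanded for the symmetric 2x2 case.
        a, b, d = self.a, self.b, self.d
        self.a = (1 - k0) * a
        self.b = (1 - k0) * b
        self.d = d - k1 * b


# Usage: noiseless detections of an object moving 2 units per frame.
kf = ConstantVelocityKalman1D()
for t in range(50):
    kf.predict()
    kf.update(2.0 * t)
print(f"position={kf.p:.2f}, velocity={kf.v:.2f}")
```

Even though the filter starts with zero velocity, it infers the object's motion purely from position detections, which is what lets a tracker predict where a box will be in the next frame.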

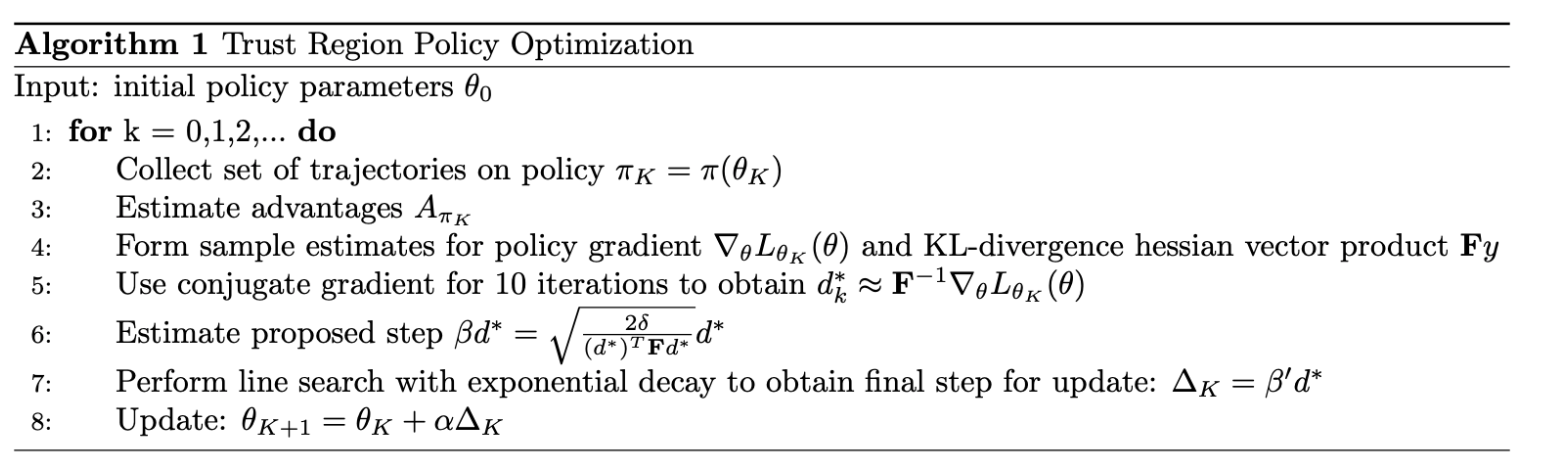

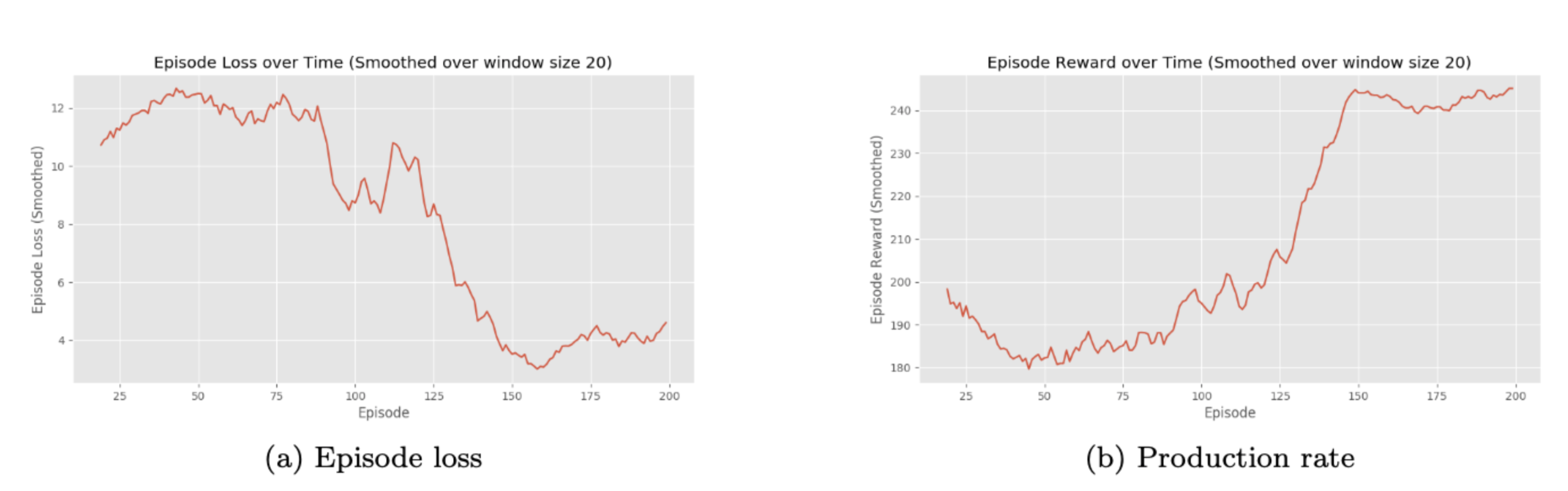

Trust Region Policy Optimization using Conjugate Gradient Descent


This work derives the Trust Region Policy Optimization (TRPO) algorithm and develops a novel online variant of TRPO using Conjugate Gradient Descent. As a proof of concept, the online variant is shown to optimize an earthmoving operation. Implemented in TensorFlow.
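In standard TRPO, the conjugate gradient method approximately solves F x = g (F: Fisher information matrix, g: policy gradient) using only Fisher-vector products, and the step is then scaled to the KL trust region. The sketch below shows that standard step in pure Python under stated assumptions: `fisher_vp` is a hypothetical callable returning F v, a small explicit matrix stands in for F, and the backtracking line search is omitted. It is not the online variant developed in this work.

```python
import math
from typing import Callable, List

Vector = List[float]


def dot(u: Vector, w: Vector) -> float:
    return sum(a * b for a, b in zip(u, w))


def conjugate_gradient(matvec: Callable[[Vector], Vector], b: Vector,
                       iters: int = 10, tol: float = 1e-10) -> Vector:
    """Approximately solve A x = b for symmetric positive-definite A,
    touching A only through matrix-vector products matvec(v) = A v."""
    x = [0.0] * len(b)
    r = list(b)            # residual b - A x (x starts at zero)
    p = list(b)            # current search direction
    rs_old = dot(r, r)
    for _ in range(iters):
        if rs_old < tol:
            break
        Ap = matvec(p)
        alpha = rs_old / dot(p, Ap)          # exact line search along p
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, Ap)]
        rs_new = dot(r, r)
        # New direction is A-conjugate to the previous ones.
        p = [ri + (rs_new / rs_old) * pi for ri, pi in zip(r, p)]
        rs_old = rs_new
    return x


def natural_gradient_step(fisher_vp: Callable[[Vector], Vector], g: Vector,
                          max_kl: float = 0.01) -> Vector:
    """TRPO-style step: maximize g.s subject to 0.5 * s^T F s <= max_kl."""
    x = conjugate_gradient(fisher_vp, g)     # x ~ F^{-1} g
    shs = dot(x, fisher_vp(x))               # x^T F x = g^T F^{-1} g
    return [math.sqrt(2 * max_kl / shs) * xi for xi in x]


# Usage with a small explicit matrix standing in for the Fisher matrix F.
F = [[4.0, 1.0], [1.0, 3.0]]
fvp = lambda v: [dot(row, v) for row in F]
x = conjugate_gradient(fvp, [1.0, 2.0])      # exact solution is [1/11, 7/11]
step = natural_gradient_step(fvp, [1.0, 2.0], max_kl=0.01)
```

Because conjugate gradient only needs products F v, the Fisher matrix never has to be formed or inverted explicitly, which is what makes TRPO tractable for networks with many parameters.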

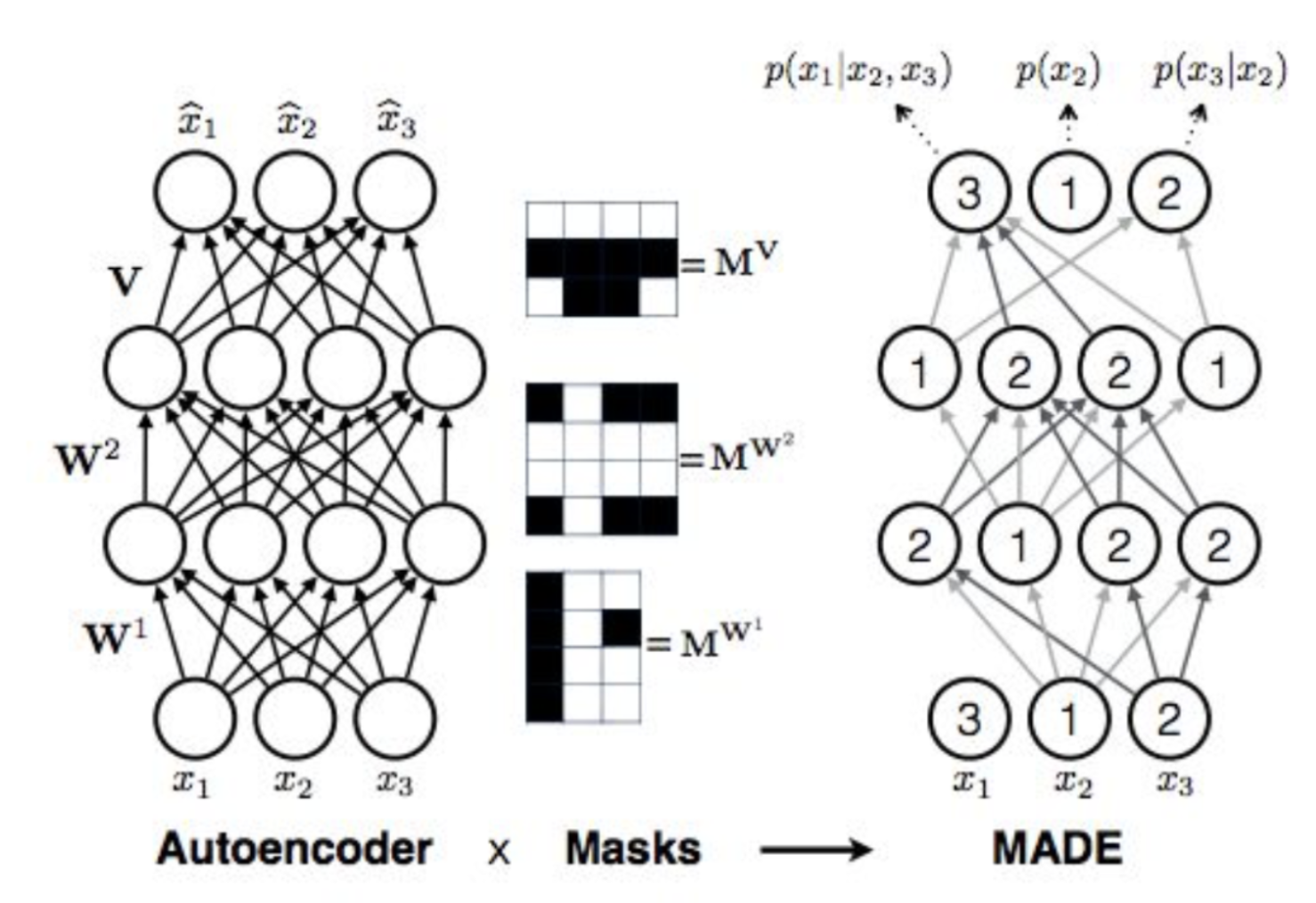

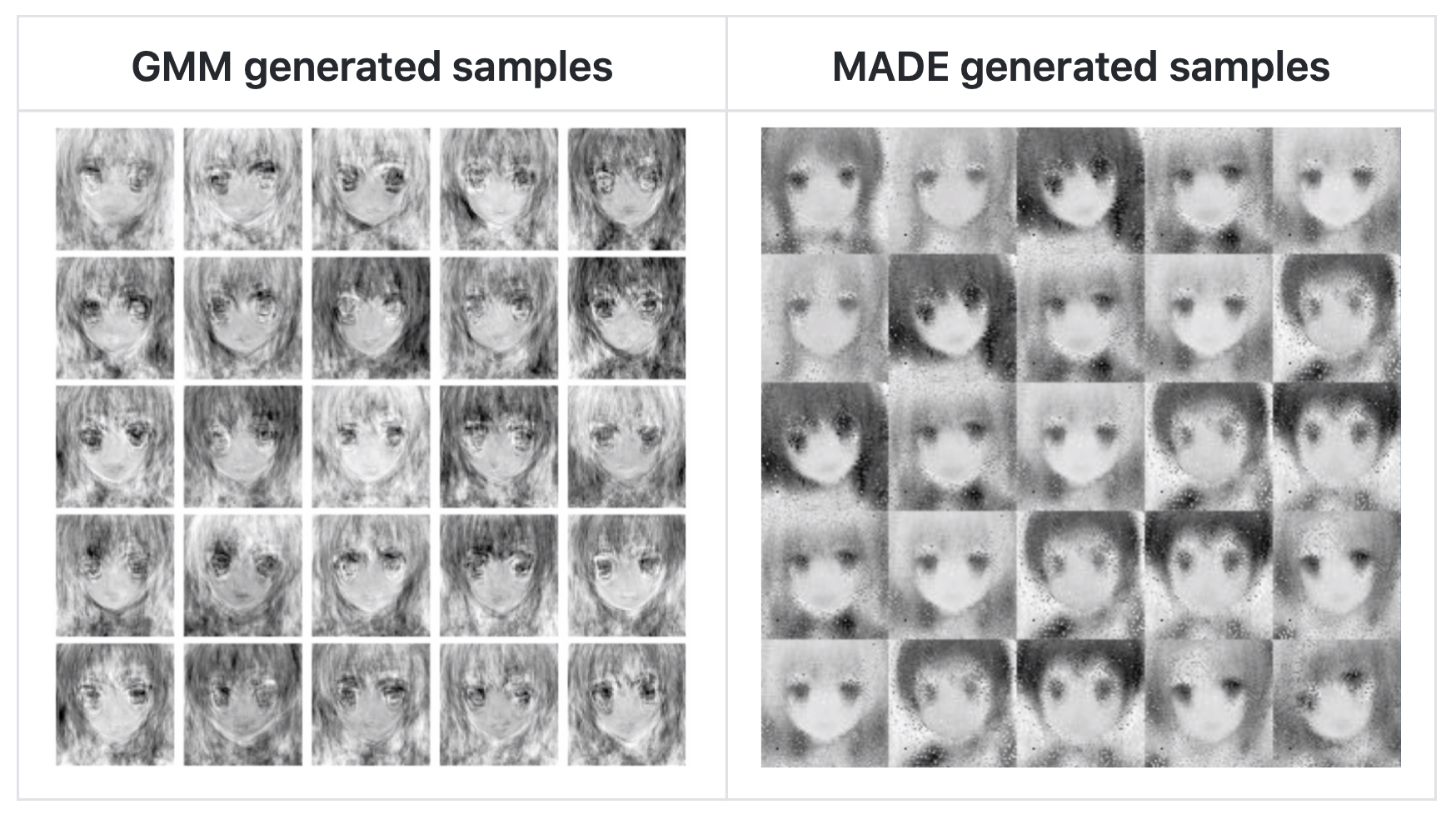

Unsupervised Density Estimation using Deep Autoregressive Models


This work explores deep autoregressive models for density estimation, such as the Neural Autoregressive Distribution Estimator (NADE) and the Masked Autoencoder for Distribution Estimation (MADE). The hypothesis is that deep autoregressive models such as MADE generate richer samples than mixture models such as Gaussian Mixture Models (GMMs); this is verified with samples generated for the Anime Faces dataset. Implemented in PyTorch.
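The key idea in MADE is to multiply the weight matrices elementwise by binary masks so that each output, which models p(x_d | x_<d), can only depend on earlier inputs. The following minimal sketch builds those masks for a single hidden layer and checks the autoregressive property. It assumes a cyclic assignment of hidden-unit degrees for reproducibility (MADE samples them at random), and the helper names are hypothetical, not taken from the project's PyTorch code.

```python
from typing import List, Tuple

Mask = List[List[int]]


def made_masks(num_inputs: int, num_hidden: int) -> Tuple[Mask, Mask]:
    """Connectivity masks for a one-hidden-layer MADE (requires num_inputs >= 2).

    Inputs get degrees 1..D and each hidden unit k gets a degree m(k) in [1, D-1].
    Degrees are assigned cyclically here for reproducibility; MADE itself samples them.
    """
    D = num_inputs
    input_deg = list(range(1, D + 1))
    hidden_deg = [k % (D - 1) + 1 for k in range(num_hidden)]
    # Hidden unit k may read input d only if m(k) >= d.
    mask_in = [[1 if hidden_deg[k] >= input_deg[d] else 0 for d in range(D)]
               for k in range(num_hidden)]
    # Output d may read hidden unit k only if d > m(k); the strict inequality
    # ensures output d never depends on x_d itself.
    mask_out = [[1 if input_deg[d] > hidden_deg[k] else 0 for k in range(num_hidden)]
                for d in range(D)]
    return mask_in, mask_out


def connectivity(mask_in: Mask, mask_out: Mask) -> Mask:
    """conn[d][j] counts hidden paths from input j to output d (0 means no dependence)."""
    return [[sum(mask_out[d][k] * mask_in[k][j] for k in range(len(mask_in)))
             for j in range(len(mask_in[0]))]
            for d in range(len(mask_out))]


mask_in, mask_out = made_masks(num_inputs=4, num_hidden=6)
conn = connectivity(mask_in, mask_out)
for d, row in enumerate(conn):
    print(f"output {d + 1} depends on inputs", [j + 1 for j, c in enumerate(row) if c])
```

With these masks, output 1 depends on no inputs (its distribution comes from the output bias alone), and each later output sees exactly the inputs before it. This lets a single forward pass evaluate every conditional of the autoregressive factorization.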

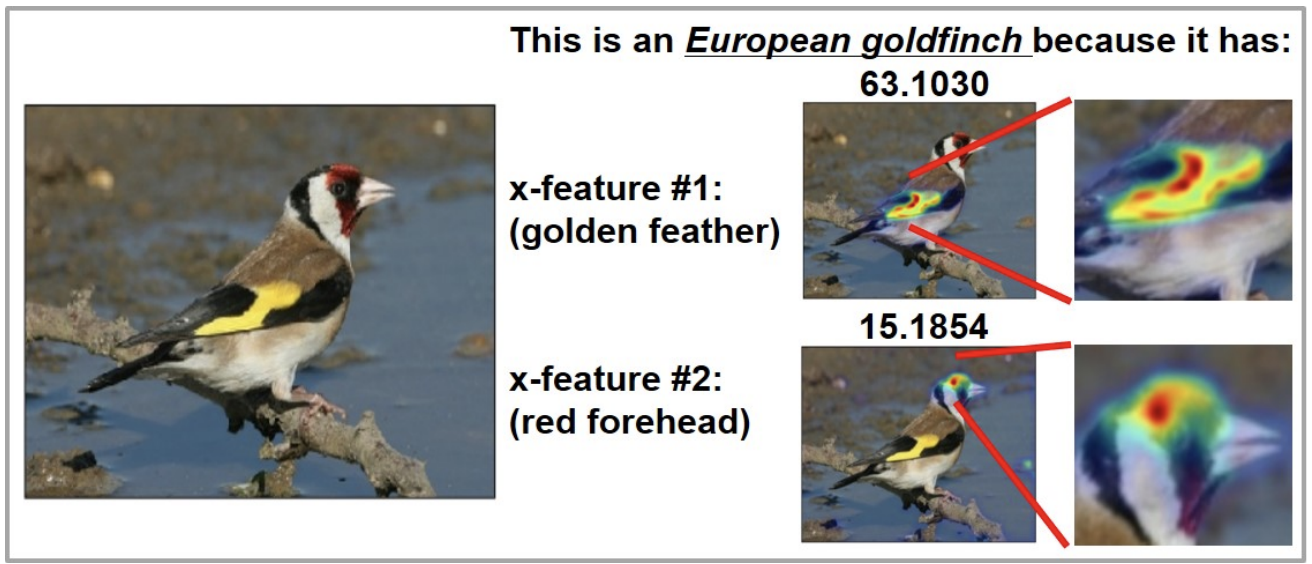

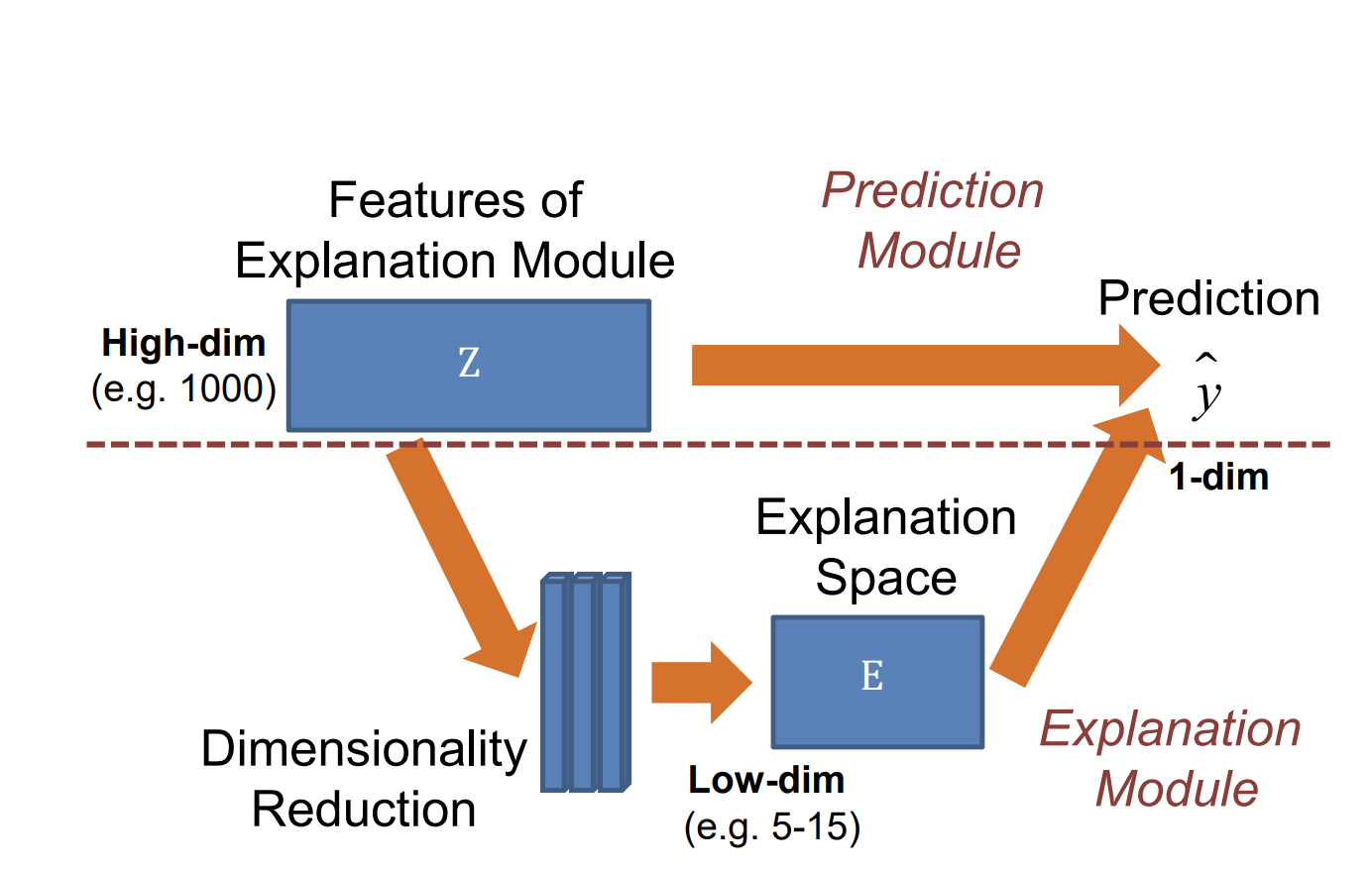

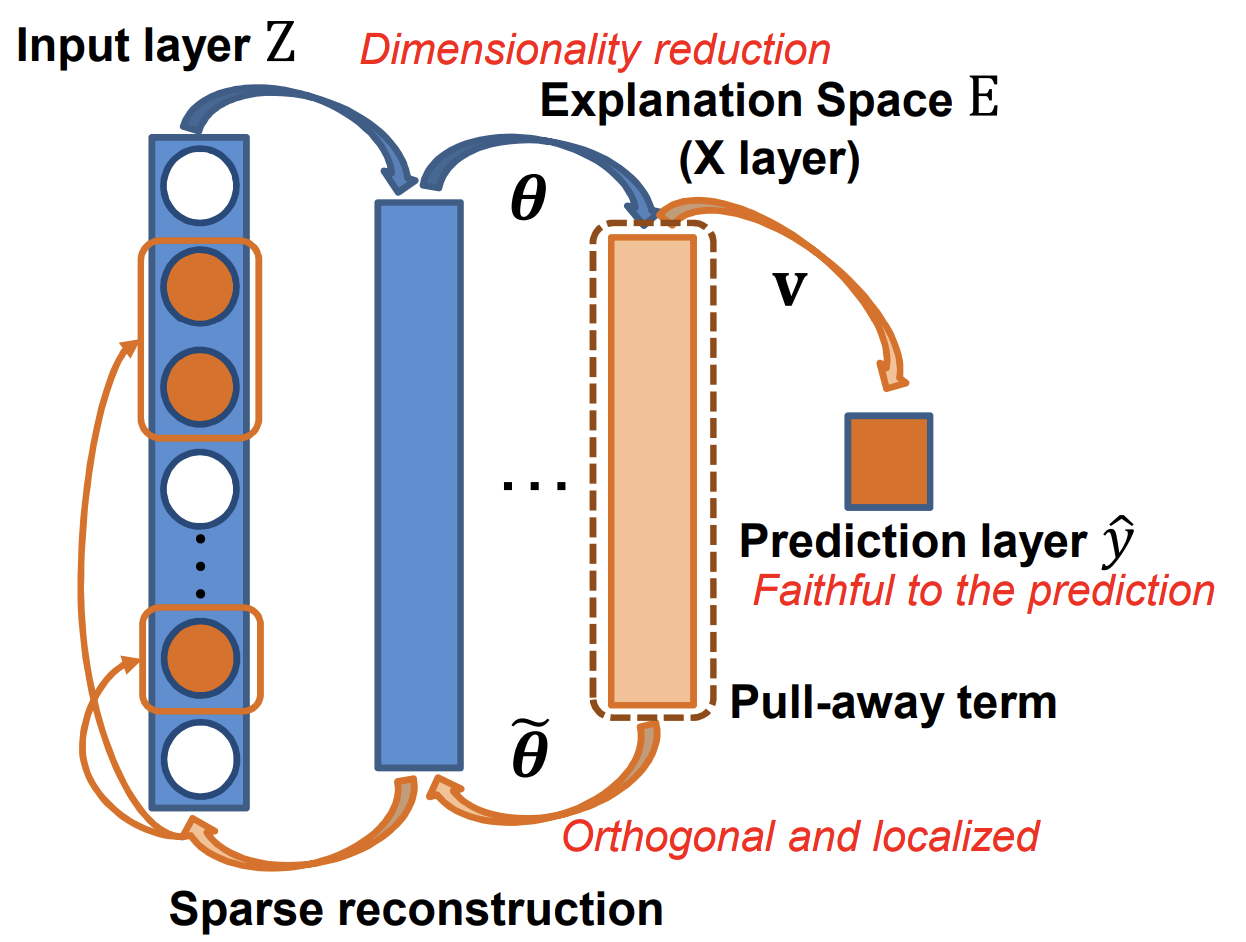

XNN: Explainable Neural Network to Disentangle Visual Concepts


XNN is a novel neural network architecture designed to yield saliency maps that can be disentangled into visual concepts. These saliency maps can therefore be used to explain the network's classifications. The XNN architecture combines stacked Sparse Reconstruction Autoencoders (SRAE) with a novel "concept loss", a "pull-away term" and Integrated Gradients-based visualization to generate explainable saliency maps. Implemented in PyTorch.
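For reference, Integrated Gradients attributes a prediction to each input feature by averaging the gradient along a straight path from a baseline x' to the input x and scaling by (x_i - x'_i). The minimal sketch below shows only that attribution step, using a midpoint Riemann sum. It makes several simplifying assumptions: a toy function `f` with a hand-written gradient `grad_f` stands in for a network, and the step count and zero baseline are arbitrary. It is not the XNN visualization code.

```python
from typing import Callable, List

Vector = List[float]


def integrated_gradients(grad_f: Callable[[Vector], Vector], x: Vector,
                         baseline: Vector, steps: int = 50) -> Vector:
    """Attribution_i = (x_i - x'_i) * average of df/dx_i along the straight path x' -> x.

    The path integral is approximated with a midpoint Riemann sum of `steps` points.
    """
    total = [0.0] * len(x)
    for i in range(steps):
        alpha = (i + 0.5) / steps                      # midpoint of the i-th sub-interval
        point = [b + alpha * (xi - b) for xi, b in zip(x, baseline)]
        total = [t + g for t, g in zip(total, grad_f(point))]
    return [(xi - b) * t / steps for xi, b, t in zip(x, baseline, total)]


# Toy "model" f(x) = x0^2 + 3*x0*x1 with its analytic gradient (a stand-in for a network).
def f(x: Vector) -> float:
    return x[0] ** 2 + 3 * x[0] * x[1]


def grad_f(x: Vector) -> Vector:
    return [2 * x[0] + 3 * x[1], 3 * x[0]]


x, baseline = [1.0, 2.0], [0.0, 0.0]
attr = integrated_gradients(grad_f, x, baseline)
# Completeness: for smooth f the attributions (approximately) sum to f(x) - f(baseline).
print(attr, sum(attr), f(x) - f(baseline))
```

For an image classifier, the same computation runs per pixel with gradients from autograd, and the per-pixel attributions form the saliency map.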

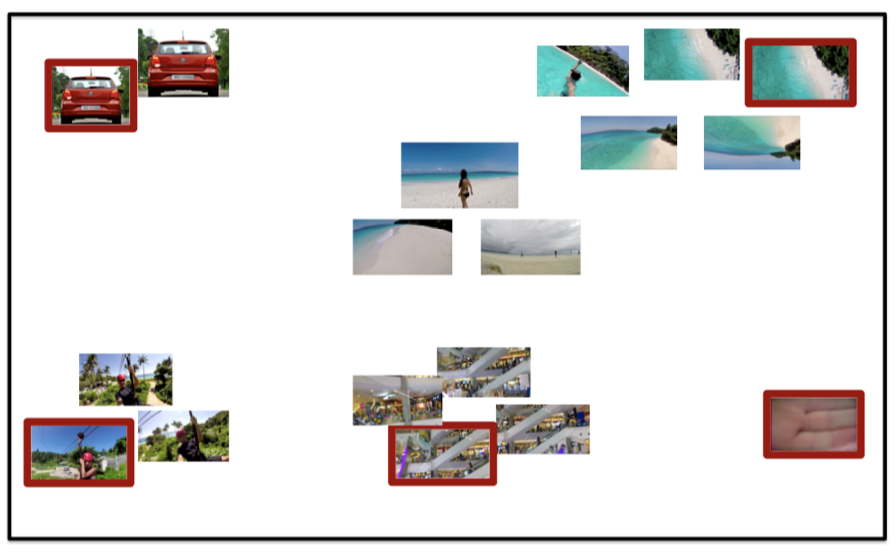

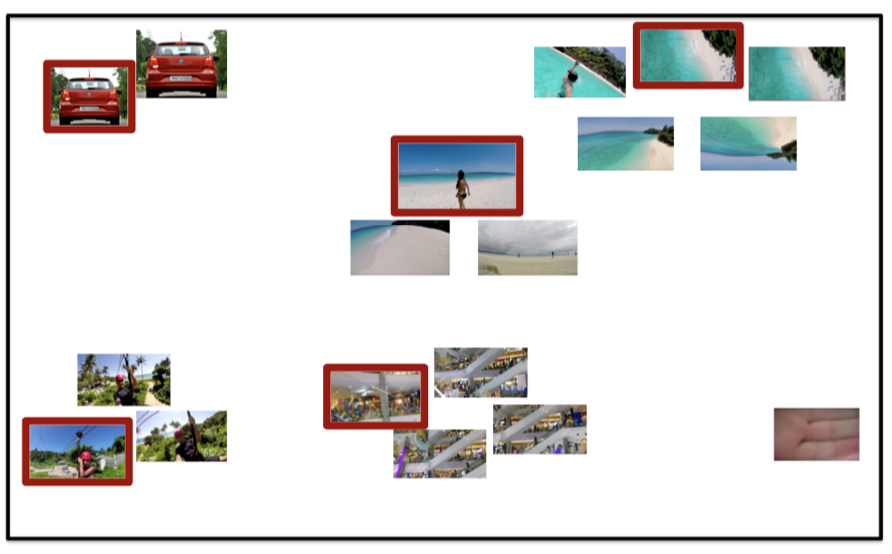

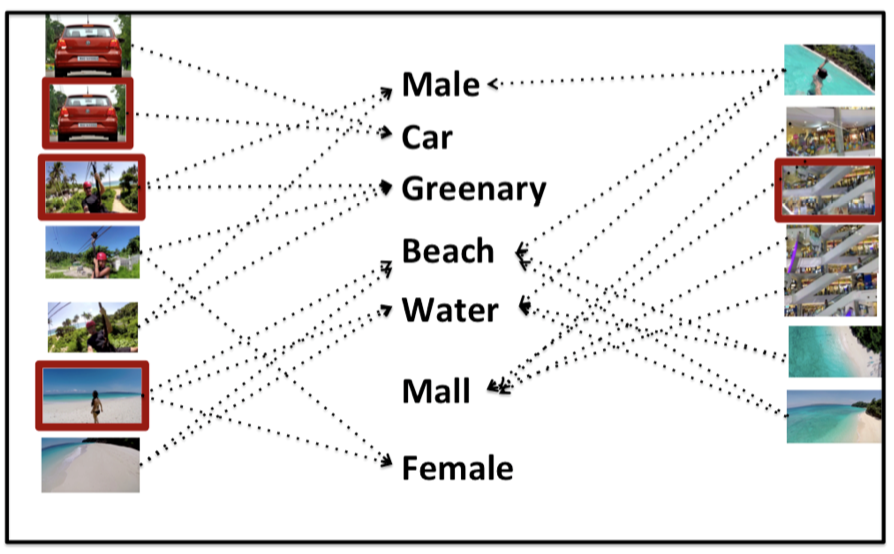

Video Summarization using Submodular Functions


This is a toolkit for Visual Data Subset Selection and Visual Data Summarization using Submodular Functions. The toolkit provides several summarization models that perform feature subset selection based on Diversity Functions, Coverage Functions and Representation Functions. Any one of three summarization algorithms can be used: Budgeted Greedy, Stream Greedy or Coverage Greedy. Developed in C++.
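As a rough illustration of budgeted greedy selection with a coverage function, the sketch below summarizes a handful of hypothetical frames. Each frame covers a set of feature ids and has a cost. At every step, the sketch adds the affordable frame with the best marginal-gain-per-cost ratio. It is a simplified Python sketch of one common cost-benefit greedy variant, not the toolkit's C++ implementation. The frame names, feature sets and costs are made up for the example.

```python
from typing import Dict, List, Optional, Set


def coverage(selected: List[str], covers: Dict[str, Set[int]]) -> int:
    """Set-coverage score: number of distinct features covered (monotone submodular)."""
    covered: Set[int] = set()
    for item in selected:
        covered |= covers[item]
    return len(covered)


def budgeted_greedy(covers: Dict[str, Set[int]], costs: Dict[str, float],
                    budget: float) -> List[str]:
    """Cost-benefit greedy: repeatedly add the affordable item with the best
    marginal-gain-per-cost ratio until nothing affordable improves the score."""
    selected: List[str] = []
    spent, score = 0.0, 0
    remaining = set(covers)
    while remaining:
        best: Optional[str] = None
        best_ratio = 0.0
        for item in sorted(remaining):              # sorted for deterministic tie-breaking
            if spent + costs[item] > budget:
                continue                            # would exceed the budget
            gain = coverage(selected + [item], covers) - score
            if gain / costs[item] > best_ratio:
                best, best_ratio = item, gain / costs[item]
        if best is None:
            break
        selected.append(best)
        spent += costs[best]
        score = coverage(selected, covers)
        remaining.remove(best)
    return selected


# Hypothetical frames, each covering a set of visual-feature ids, with per-frame costs.
covers = {"f1": {1, 2, 3}, "f2": {3, 4}, "f3": {4, 5, 6, 7}, "f4": {1, 7}}
costs = {"f1": 1.0, "f2": 1.0, "f3": 2.0, "f4": 1.0}
summary = budgeted_greedy(covers, costs, budget=3.0)
print(summary, coverage(summary, covers))
```

Diminishing returns are what make greedy selection effective here: once `f1` is chosen, `f2` and `f4` add only one new feature each, so the larger but more expensive `f3` wins the remaining budget. In practice, the ratio rule is usually paired with a comparison against the best single affordable item to keep an approximation guarantee.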

Publications

Structured Attention Graphs for Understanding Deep Image Classifications

Vivswan Shitole, Li Fuxin, Minsuk Kahng, Prasad Tadepalli, Alan Fern

arXiv:2011.06733

Experience

Machine Learning Engineer

◾️ Developed several computer vision applications for video analytics and surveillance. This involved customizing the following computer vision algorithms and deep learning models for our specific use cases:

- Background subtraction: MOG, GMG, MLBGS

- Face detection: NPD, Dlib Frontal Face Detector

- Face recognition: OpenFace, Custom VGG model obtained by transfer learning

- Head detection: Haar cascade classifier

- Human detection: HOG, ICF, DPM

- Object recognition: Custom Darknet YOLO model obtained by transfer learning

◾️ Created an "object tracker" using Kalman-filter-based SOR tracking to estimate traffic and detect intrusions in surveillance videos of crowded public places in Mumbai.

Research Intern

◾️ Created a "classroom monitor" that marks attendance and detects anomalous events in classrooms through surveillance cameras deployed in some rural schools in Mumbai, with the help of NCETIS. This involved training custom MobileNet-SSD and Darknet YOLO models for face and apparel recognition using Caffe and building software around them.

Software Design Engineer

◾️ Developed the SafeTI Diagnostic Library for the functional safety of TI’s safety-critical microcontrollers deployed in ASIL B applications.

◾️ Developed APIs for the GAP, GATT and Security Manager layers of the Bluetooth Low Energy software stack for TI’s ultra-low-power microcontrollers.

Project Trainee

◾️ Worked on camera synchronization for ADAS automotive camera systems connected to the host over FPD-Link (based on the LVDS standard), used in application scenarios such as surround view, stereo vision and multi-camera fusion for redundancy.

Education

Oregon State University

Computer Science - Artificial Intelligence Track

GPA: 3.85

Relevant Courses:

- CS 534. Machine Learning

- CS 531. Artificial Intelligence

- CS 536. Probabilistic Graphical Models

- CS 519. Convex Optimization

- CS 533. Intelligent Agents

- CS 519. Algorithms Design and Analysis

- ROB 567. Human-Robot Interaction

- CS 539. Embodied AI

National Institute of Technology Karnataka

Electrical and Electronics

GPA: 3.3